The Rise of LiDAR Technology: Innovations and Applications Across Industries

We are pleased to continue our article series on Data Labeling for Product Managers with our contributor José Luis Muñoz! This is a longer article, but it distills decades of industry and academic experience with LiDAR technology and its applications.

TLDR

LiDAR deserves its own category in data annotation because of the technical complexity of LiDAR sensors and the data they produce. By emitting energy and measuring the returns, LiDAR creates precise 3D representations of its surroundings. LiDAR technology is used in autonomous vehicles, remote sensing, and augmented reality, to name a few.

The LiDAR market is booming and is forecast to reach $6.3B by 2027; at the same time, LiDAR sensors are becoming cheaper. In autonomous vehicles, LiDAR excels at object detection and high-definition mapping. Augmented reality benefits from LiDAR for room scanning and immersive gaming. Construction uses LiDAR for site analysis, progress monitoring, and safety.

In this post, we dive into how LiDAR sensors work and highlight some machine learning and non-machine learning use cases.

Topics Covered

1. What is LiDAR?

2. The LiDAR Market

3. LiDAR for Autonomous Vehicles

4. LiDAR for Augmented Reality

5. LiDAR for Construction

6. LiDAR Annotation for Machine Learning

We will dive deeper into each topic in the following sections.

1. What is LiDAR?

LiDAR stands for Light Detection and Ranging. It is an active sensor: it emits energy (laser pulses) and measures the energy that is reflected back. LiDAR sensors can be airborne (mounted on aircraft or drones) or ground-based. Passive sensors, on the other hand, do not emit energy but instead measure energy that is naturally available. A photo camera is an example of a passive sensor. The images below depict how a LiDAR sensor works and provide examples of active vs. passive sensors.
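Here is a minimal sketch of the ranging step. It assumes a pulsed, time-of-flight LiDAR; some sensors use other ranging schemes, such as FMCW. The sensor times how long a pulse takes to reach a surface and come back. Because the light covers the distance twice, the range is half the round-trip path, d = c·t/2. The function name is illustrative. The sketch also ignores the small difference between the speed of light in air and in a vacuum.

```python
# Speed of light in a vacuum, in metres per second.
# Assumption: pulsed time-of-flight ranging; the slightly lower speed in air is ignored.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_time_s: float) -> float:
    """One-way distance to the target for a pulsed (time-of-flight) return.

    The pulse travels to the target and back, so the range is half of the
    total path length: d = c * t / 2.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# A return arriving 200 nanoseconds after emission is roughly 30 m away.
print(f"{range_from_time_of_flight(200e-9):.2f} m")
```

The same formula shows why the timing electronics matter: each nanosecond of timing error corresponds to about 15 cm of range error.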

Terrestrial Laser Scanning (TLS) is a ground-based LiDAR method. It is often used for surveying and mapping, and it produces highly detailed and accurate 3D data (with millimeter-level accuracy) in the form of a 3D point cloud.

A 3D point cloud is a collection of data points in three-dimensional space; in some cases, it also includes color (RGB) or intensity values. Each point in the cloud represents the spatial coordinates (x, y, z) of a particular location in the three-dimensional environment. The image below is a LiDAR point cloud of the Washington Monument.
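A spinning LiDAR typically reports each return as a range plus the beam's horizontal (azimuth) and vertical (elevation) angles. Converting those values to Cartesian coordinates yields the (x, y, z) points described above. The sketch below stores the cloud as an N×4 NumPy array of x, y, z, and intensity. The axis convention (x forward, y left, z up) and the function name are assumptions for illustration; real sensors document their own conventions.

```python
import numpy as np


def returns_to_point_cloud(ranges_m, azimuth_deg, elevation_deg, intensity):
    """Convert per-return polar measurements into an (N, 4) point cloud.

    Columns are x, y, z (metres, sensor frame) and intensity.
    ASSUMED convention: x forward, y left, z up; azimuth measured
    counter-clockwise from the x axis, elevation upward from the x-y plane.
    """
    r = np.asarray(ranges_m, dtype=float)
    az = np.radians(azimuth_deg)
    el = np.radians(elevation_deg)
    x = r * np.cos(el) * np.cos(az)
    y = r * np.cos(el) * np.sin(az)
    z = r * np.sin(el)
    return np.column_stack([x, y, z, np.asarray(intensity, dtype=float)])


# Three illustrative returns: straight ahead, to the left, and straight up.
cloud = returns_to_point_cloud(
    ranges_m=[10.0, 10.0, 5.0],
    azimuth_deg=[0.0, 90.0, 0.0],
    elevation_deg=[0.0, 0.0, 90.0],
    intensity=[0.2, 0.8, 0.5],
)
print(cloud.shape)
```

Storing extra attributes such as intensity or RGB as additional columns keeps the whole cloud in one array, which most point cloud tooling can consume directly.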

By combining data from a Global Positioning System (GPS) receiver, an Inertial Measurement Unit (IMU), and the LiDAR sensor, a detailed 3D point cloud can be created. Here's how each component contributes:

GPS: Provides precise location information, giving the overall position of the sensor in a global reference frame.

IMU: Measures accelerations and angular rates, from which the sensor's orientation (roll, pitch, and heading) and velocity are estimated. It is crucial for correcting movements and tilts of the sensor, especially in mobile mapping systems.

LiDAR: Emits laser pulses that bounce off objects and return to the sensor, which uses them to measure the distance to the surfaces they hit. This results in a dense set of points (a point cloud) representing the scanned environment.

Combined, these sensors enable the creation of highly accurate and detailed 3D representations of the environment, which are used in various applications like autonomous vehicles, geography, archaeology, forestry, and urban planning. GPS and IMU data help geo-reference and precisely orient the LiDAR point cloud in space, providing a comprehensive understanding of the environment.
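The georeferencing step can be sketched as a rigid transformation. The IMU-derived roll, pitch, and yaw rotate each point from the sensor frame into a world-aligned frame, and the GPS position then translates it. This minimal sketch assumes a Z-Y-X (yaw-pitch-roll) rotation order. It also assumes the GPS position has already been converted into a local metric frame such as east-north-up. It ignores three corrections that real mobile mapping systems apply: lever-arm calibration, boresight calibration, and per-point timestamp interpolation. All function names are hypothetical.

```python
import numpy as np


def rotation_from_rpy(roll, pitch, yaw):
    """Sensor-to-world rotation from roll, pitch, yaw in radians (ASSUMED Z-Y-X order)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return rz @ ry @ rx


def georeference(points_sensor, roll, pitch, yaw, position_world):
    """Map (N, 3) sensor-frame points into a local world frame.

    `position_world` is the sensor position from GPS, ASSUMED to be already
    converted into the same local metric frame (e.g. east-north-up metres).
    Lever-arm and boresight calibration offsets are ignored in this sketch.
    """
    rot = rotation_from_rpy(roll, pitch, yaw)
    pts = np.asarray(points_sensor, dtype=float)
    # Row-vector form of world = R @ p + t for every point at once.
    return pts @ rot.T + np.asarray(position_world, dtype=float)


# Sensor at (100, 200, 5) in the local frame, yawed 90 degrees to the left:
# a point 10 m straight ahead lands at about (100, 210, 5).
world = georeference([[10.0, 0.0, 0.0]], 0.0, 0.0, np.pi / 2, [100.0, 200.0, 5.0])
print(np.round(world, 3))
```

Writing the transform with row vectors (`pts @ rot.T`) applies it to the entire cloud in one matrix product instead of looping over points.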

2. The LiDAR Market

Yole Group sized the global LiDAR market at $2.1B in 2021 and forecast that it will grow to $6.3B by 2027. In 2021, the largest LiDAR market segments were topography ($1.3B), manufacturing ($402M), and robotic cars ($120M). By 2027, Yole Group expects the top three segments to be advanced driver-assistance systems (ADAS) ($2B), topography ($1.7B), and smart infrastructure ($1.1B).

Additionally, Yole Group research from 2020 reports that LiDAR sensor prices have dropped dramatically. For example, the average price of a Velodyne LiDAR sensor was $17,900 in 2017, and Velodyne plans to reach an average price of $600 per sensor in 2024.
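Simple arithmetic turns the market and price figures above into growth rates. The snippet below computes the compound annual growth rate (CAGR) implied by Yole Group's 2021 and 2027 figures. It also computes the relative price reduction implied by Velodyne's 2017 price and 2024 target. Note that the $600 figure is a stated target, not an observed price.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Yole Group figures quoted above: $2.1B (2021) -> $6.3B (2027), i.e. 6 years.
market_growth = cagr(2.1, 6.3, 2027 - 2021)

# Velodyne average sensor price: $17,900 (2017) vs. the $600 target for 2024.
price_drop = 1.0 - 600 / 17_900

print(f"Implied market CAGR: {market_growth:.1%}")
print(f"Implied price reduction: {price_drop:.1%}")
```

Tripling a market over six years works out to roughly 20% growth per year. The price target corresponds to a reduction of roughly 97% relative to 2017.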

3. LiDAR for Autonomous Vehicles

In the words of former Uber CEO Travis Kalanick, “laser is the sauce.” Kalanick is not alone in praising LiDAR as the secret sauce of the autonomous vehicle tech stack, where it is an integral component used for object detection, high-definition mapping, lane detection, and sensor fusion.

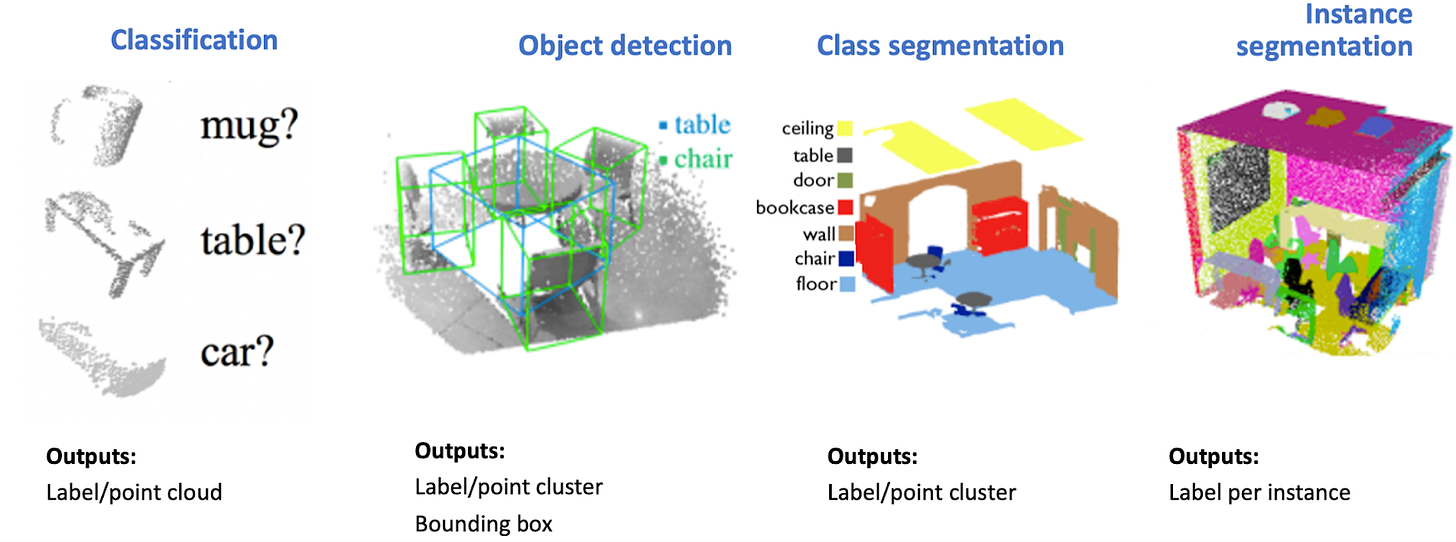

Common machine learning (ML) use cases for LiDAR include object detection, environment mapping (instance segmentation), localization and navigation, road condition monitoring, and pedestrian intent prediction. Below, we take a closer look at two of them: object detection and environment mapping.

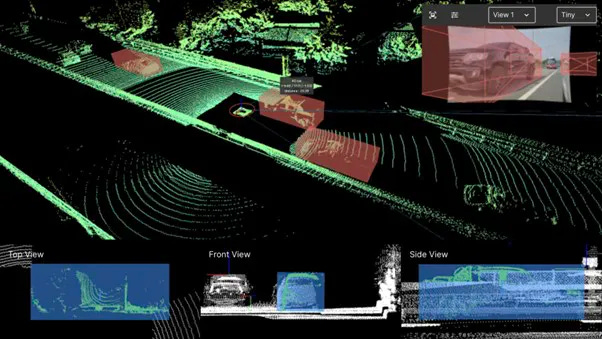

Object detection - LiDAR sensors can be used to both detect and track objects. The primary goal of object detection in ADAS is to identify objects such as other vehicles, pedestrians, cyclists, road signs, and obstacles. This information is used to warn drivers of potential hazards, automate certain driving functions (like braking or steering), and improve road safety. After LiDAR data acquisition, the object detection process can be described in three main steps:

Data preprocessing & feature extraction: The raw point cloud data is often noisy and unevenly distributed. Preprocessing steps like filtering, downsampling, and ground removal are applied to clean and organize the data for more effective processing. Feature extraction can involve transforming the point cloud into a format more suitable for processing, like a 3D voxel grid or a bird's eye view projection.

Object detection models: Deep learning models such as Convolutional Neural Networks (CNNs) or PointNet are used to detect objects within the point cloud. These models are trained to recognize patterns corresponding to predefined object categories (such as cars and pedestrians).

Post-processing: The output from the model typically includes bounding boxes or other shapes that encapsulate the detected objects. Each bounding box is associated with a class label (indicating the type of object) and may include additional information like the confidence score of the detection.
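Three common operations illustrate the preprocessing and feature extraction step: voxel downsampling, ground removal, and a bird's-eye-view (BEV) projection. This is a minimal NumPy sketch, not a production pipeline. The ground removal simply drops points near an assumed flat ground plane at z = 0; real pipelines often fit the ground instead, for example with RANSAC. The voxel size, grid extents, and synthetic scene are hypothetical values chosen for illustration.

```python
import numpy as np


def voxel_downsample(points, voxel_size):
    """Replace all points that fall into the same cubic voxel by their centroid."""
    keys = np.floor(points[:, :3] / voxel_size).astype(np.int64)
    # `inverse` maps every point to the index of its voxel.
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # its shape varies slightly across NumPy versions
    n_voxels = int(inverse.max()) + 1
    sums = np.zeros((n_voxels, points.shape[1]))
    np.add.at(sums, inverse, points)  # unbuffered per-voxel accumulation
    counts = np.bincount(inverse, minlength=n_voxels)
    return sums / counts[:, None]


def remove_ground(points, ground_z=0.0, tolerance=0.2):
    """Keep points more than `tolerance` m above an ASSUMED flat ground plane."""
    return points[points[:, 2] > ground_z + tolerance]


def bev_occupancy(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Project points onto a bird's-eye-view grid: 1 where a cell holds any point."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    grid = np.zeros((nx, ny), dtype=np.uint8)
    grid[ix[inside], iy[inside]] = 1
    return grid


# Hypothetical synthetic scene: flat ground plus one car-sized block of points.
rng = np.random.default_rng(0)
ground = np.column_stack([rng.uniform(0, 40, 500), rng.uniform(-20, 20, 500),
                          rng.normal(0.0, 0.02, 500)])
car = np.column_stack([rng.uniform(10, 14, 200), rng.uniform(-1, 1, 200),
                       rng.uniform(0.3, 1.5, 200)])
cloud = np.vstack([ground, car])

sparse = voxel_downsample(cloud, voxel_size=0.5)
objects = remove_ground(sparse)
grid = bev_occupancy(objects)
print(len(cloud), len(sparse), len(objects), int(grid.sum()))
```

The resulting occupancy grid is the kind of 2D input that a BEV-based detector can consume. Voxel-based detectors instead keep the 3D voxel structure.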

Object detection glossary

Bounding Boxes: The most common output, representing the location and size of each detected object in 3D space. In a point cloud, these boxes are usually 3D cuboids that capture the width, height, and depth of the object, and often its heading (yaw angle).

To dig deeper into bounding boxes for computer vision, see this blog post from Aya Data.

Class Labels: Each bounding box comes with a label indicating the category of the detected object (e.g., vehicle, pedestrian, traffic sign).

Confidence Scores: The model also outputs a confidence score for each detection, indicating how certain it is that the bounding box contains an object of the predicted class.

Integration with ADAS: The detected objects and their characteristics are then integrated into the ADAS system, which uses this information for various functions such as collision warning, automatic emergency braking, and adaptive cruise control.
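The post-processing outputs above (boxes, class labels, and confidence scores) can be illustrated with a small sketch. It filters detections by confidence and removes duplicates with non-maximum suppression (NMS), a widely used post-processing step. To keep the sketch short, the boxes are axis-aligned and ignore heading. Real 3D detectors usually predict a yaw angle and compute overlap on rotated boxes. The class, field names, thresholds, and detections are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Box3D:
    """Axis-aligned 3D box (centre + size in metres); yaw is ignored in this sketch."""
    cx: float
    cy: float
    cz: float
    length: float  # extent along x
    width: float   # extent along y
    height: float  # extent along z
    label: str
    score: float

    def bounds(self):
        half = (self.length / 2, self.width / 2, self.height / 2)
        centre = (self.cx, self.cy, self.cz)
        lo = tuple(c - h for c, h in zip(centre, half))
        hi = tuple(c + h for c, h in zip(centre, half))
        return lo, hi


def iou_3d(a: Box3D, b: Box3D) -> float:
    """Intersection-over-union of two axis-aligned 3D boxes."""
    (alo, ahi), (blo, bhi) = a.bounds(), b.bounds()
    inter = 1.0
    for i in range(3):
        overlap = min(ahi[i], bhi[i]) - max(alo[i], blo[i])
        if overlap <= 0:
            return 0.0
        inter *= overlap
    vol_a = a.length * a.width * a.height
    vol_b = b.length * b.width * b.height
    return inter / (vol_a + vol_b - inter)


def postprocess(boxes, score_threshold=0.5, iou_threshold=0.5):
    """Drop low-confidence boxes, then apply per-class non-maximum suppression."""
    candidates = sorted((b for b in boxes if b.score >= score_threshold),
                        key=lambda b: b.score, reverse=True)
    kept = []
    for box in candidates:
        # Keep a box unless a higher-scoring box of the same class overlaps it heavily.
        if all(box.label != k.label or iou_3d(box, k) < iou_threshold for k in kept):
            kept.append(box)
    return kept


detections = [
    Box3D(12.0, 0.0, 0.8, 4.5, 1.9, 1.6, "car", 0.92),
    Box3D(12.2, 0.1, 0.8, 4.4, 1.8, 1.6, "car", 0.81),   # duplicate of the first car
    Box3D(6.0, 3.0, 0.9, 0.6, 0.6, 1.8, "pedestrian", 0.74),
    Box3D(20.0, -4.0, 0.8, 4.5, 1.9, 1.6, "car", 0.31),  # below the score threshold
]
for box in postprocess(detections):
    print(box.label, box.score)
```

The second car box overlaps the first heavily (IoU of about 0.82), so NMS suppresses it. The score threshold removes the low-confidence car at 20 m.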

Examples of driver-assistance and autonomous driving systems that rely on object detection include Tesla’s Autopilot (Reference: https://ai.plainenglish.io/decoding-the-technology-behind-tesla-autopilot-how-it-works-af92cdd5605f), Waymo Driver (Reference: https://waymo.com/waymo-driver/), and Mobileye (Reference: https://www.mobileye.com/technology/). Note that Tesla’s Autopilot performs object detection with cameras rather than LiDAR.

High-definition environment mapping - Much like aerial LiDAR surveys, which capture the features of the ground below, LiDAR sensors mounted on data collection vehicles can be used to produce high-definition maps of road features and their surroundings. Bird's-eye-view (BEV) projections of LiDAR data make it possible to identify features such as lane markings.
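Lane markings are typically painted with retroreflective material, so they tend to return stronger LiDAR intensities than the surrounding asphalt. One minimal way to exploit this is to rasterize road-surface points into a BEV image that keeps the maximum intensity per cell, then threshold that image. The sketch makes three assumptions: intensities are normalized to [0, 1], points are already restricted to the road surface, and the threshold is a hypothetical 0.6. Production HD-mapping pipelines are considerably more involved.

```python
import numpy as np


def bev_intensity_image(points, x_range, y_range, cell):
    """Rasterise (N, 4) [x, y, z, intensity] points into a max-intensity BEV image."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)
    inside = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    image = np.zeros((nx, ny))  # empty cells stay at intensity 0
    # Keep the brightest return that lands in each cell.
    np.maximum.at(image, (ix[inside], iy[inside]), points[inside, 3])
    return image


def lane_marking_candidates(image, threshold=0.6):
    """Cells brighter than a HYPOTHETICAL threshold on normalised intensity."""
    return image > threshold


# Synthetic road surface: dull asphalt with one bright painted stripe.
rng = np.random.default_rng(1)
n = 5000
road = np.column_stack([rng.uniform(0, 30, n), rng.uniform(-4, 4, n),
                        np.zeros(n), rng.uniform(0.05, 0.2, n)])
stripe = (road[:, 1] >= 1.8) & (road[:, 1] < 2.0)
road[stripe, 3] = 0.9

image = bev_intensity_image(road, (0.0, 30.0), (-4.0, 4.0), cell=0.2)
rows, cols = np.nonzero(lane_marking_candidates(image))
print(sorted(set(cols.tolist())))
```

In this synthetic scene, only the column of cells covering the painted stripe (y between 1.8 m and 2.0 m) exceeds the threshold.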

Environment mapping in the context of ADAS involves creating a detailed representation of the vehicle's surroundings. This process is crucial for enabling various ADAS features, especially in semi-autonomous and autonomous vehicles. Here are the key steps in the process:

Data Collection: The first step involves gathering data from the various sensors mounted on the vehicle. These typically include LiDAR, cameras, radar, ultrasonic sensors, and sometimes GPS. Each sensor type provides different kinds of data, such as distance measurements, visual imagery, and location information.

Sensor Fusion: Data from different sensors are combined or fused to create a more comprehensive and accurate picture of the environment. Sensor fusion algorithms are used to integrate and synchronize data, addressing the limitations of individual sensors and leveraging their combined strengths.

Feature Extraction: This involves analyzing the sensor data to identify key features of the environment, such as road lanes, traffic signs, other vehicles, pedestrians, barriers, and general topography. Advanced algorithms, often ML-based, are used for this purpose.

Localization: The system determines the vehicle's precise location within the environment, often using GPS data in conjunction with local features identified from sensor data. This step is crucial for path planning and navigation.

Mapping: The processed data is used to generate a map of the surrounding environment. This can be a dynamic 3D map, which is continuously updated in real time as the vehicle moves.
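One of the simplest forms of the sensor fusion step combines independent measurements of the same quantity by weighting each one by the inverse of its variance. More precise sensors therefore count for more. For independent Gaussian measurements, this is what a Kalman filter update reduces to when the state does not change between measurements. The sketch below fuses a LiDAR range and a radar range to the same object. The noise levels are hypothetical. Real fusion systems must also synchronize timestamps and calibrate the relative poses of the sensors.

```python
def fuse_measurements(measurements):
    """Inverse-variance weighted fusion of independent estimates of one quantity.

    `measurements` is a list of (value, variance) pairs, e.g. the range to the
    same object as reported by different sensors. Returns the fused value and
    its variance, which is smaller than any individual variance.
    """
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# HYPOTHETICAL noise levels: LiDAR range is precise, radar range less so.
lidar = (25.10, 0.05 ** 2)  # (metres, metres^2)
radar = (25.60, 0.50 ** 2)
value, variance = fuse_measurements([lidar, radar])
print(f"fused range: {value:.3f} m, std: {variance ** 0.5:.3f} m")
```

The fused range stays close to the LiDAR reading because the LiDAR variance is 100 times smaller. The fused standard deviation ends up slightly below the LiDAR's own.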

The outputs of environment mapping in ADAS include:

Dynamic 3D Maps: Real-time 3D representations of the vehicle's surroundings.

Object and Event Data: Information about detected objects and events, such as the location, speed, and trajectory of other vehicles, pedestrians, and any relevant road signs or signals.

Environment mapping glossary

Instance segmentation: LiDAR instance segmentation identifies and outlines individual objects, such as vehicles, pedestrians, and road signs, providing their detailed shapes and positions in 3D space. It combines elements of object detection and semantic (class) segmentation: each point in the 3D point cloud receives an instance label, so points that belong to the same object form one cluster. For example, points belonging to two different vehicles form two separate instance clusters, even though both belong to the vehicle class.

By integrating this detailed object data into the environment mapping process, the ADAS system gains a richer and more nuanced representation of its surroundings. This integration ensures that the system not only has a comprehensive map of the environment but also possesses intricate details about each object within it, enhancing navigation accuracy, obstacle detection, and overall driving safety.

Interaction with Vehicle Systems: The mapping and path planning outputs are used to inform and control various vehicle systems, like steering, acceleration, and braking, especially in semi-autonomous or fully autonomous driving modes.
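Classical Euclidean clustering can illustrate the instance idea, in which points from two vehicles share a class but get different instance labels. Points closer than a distance threshold are linked, and each connected group becomes one instance. This is a non-learned stand-in, not the ML-based instance segmentation described above. It is often run after ground removal, because ground points would otherwise connect everything. The brute-force neighbour search, the radius, and the synthetic "cars" are assumptions chosen for clarity.

```python
from collections import deque

import numpy as np


def euclidean_clusters(points, radius=0.5, min_points=3):
    """Label connected groups of points whose neighbours lie within `radius` metres.

    Returns one integer instance label per point, or -1 for points in clusters
    smaller than `min_points`. Uses brute-force O(N^2) distances: fine for a
    demo, far too slow for full scans (a KD-tree would be used instead).
    """
    xyz = np.asarray(points, dtype=float)[:, :3]
    labels = np.full(len(xyz), -1, dtype=int)
    visited = np.zeros(len(xyz), dtype=bool)
    next_label = 0
    for seed in range(len(xyz)):
        if visited[seed]:
            continue
        visited[seed] = True
        members, queue = [seed], deque([seed])
        while queue:  # breadth-first search over the neighbour graph
            i = queue.popleft()
            dist = np.linalg.norm(xyz - xyz[i], axis=1)
            for j in np.nonzero((dist < radius) & ~visited)[0]:
                visited[j] = True
                members.append(j)
                queue.append(j)
        if len(members) >= min_points:
            labels[members] = next_label
            next_label += 1
    return labels


# Two HYPOTHETICAL car-shaped grids of points 0.3 m apart, separated by a 4.5 m gap:
# same class, two different instances.
xs, ys, zs = np.arange(15) * 0.3, np.arange(6) * 0.3, np.arange(5) * 0.3
gx, gy, gz = np.meshgrid(xs, ys, zs, indexing="ij")
car = np.column_stack([gx.ravel(), gy.ravel(), gz.ravel()])
cloud = np.vstack([car + [10.0, 2.0, 0.0], car + [10.0, -4.0, 0.0]])

labels = euclidean_clusters(cloud)
print(np.unique(labels))
```

A learned instance segmentation model would also assign each cluster a class. Clustering like this only separates objects that are spatially disconnected.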

4. LiDAR for Augmented Reality

Snapchat was an early adopter of LiDAR technology: when the iPhone 12 Pro launched with a LiDAR scanner in 2020, Snapchat used it to enhance the app’s popular augmented reality (AR) experiences. Other AR applications for LiDAR technology include room scanning, home furniture shopping, and immersive gaming.

Here are some example products for each of the above use cases:

AR Applications for Room Scanning:

Ikea Place: This app allows users to visualize how furniture and products would look and fit in their homes. Utilizing AR and LiDAR, Ikea Place can accurately assess the dimensions of a room and overlay 3D models of furniture.

Canvas: Developed by Occipital, Canvas uses LiDAR for 3D laser scanning of rooms. It then enables users to create accurate 3D models of their spaces, which can be useful for interior design and remodeling projects.

Shopping for Home Furniture:

Wayfair: The Wayfair app incorporates AR features to help users visualize how furniture and decor will look in their actual space. With the integration of LiDAR, this experience is enhanced, providing more accurate sizing and fit within a room (Reference: https://www.aboutwayfair.com/augmented-reality-with-a-purpose#:~:text=Enabling%20higher%20senses%20of%20realism,virtual%20products%20in%20their%20home.)

Houzz: The Houzz app includes an AR feature called "View in My Room 3D" that lets users preview products in their homes before purchasing. The use of LiDAR technology could improve the accuracy and realism of these visualizations.

Immersive Gaming:

Pokémon GO: Although it does not use LiDAR, Pokémon GO is an example of an AR game that could benefit significantly from LiDAR technology, which would enable more immersive gameplay and more realistic interactions with the environment.

AR Dragon: An AR game where you take care of a virtual pet dragon. Implementing LiDAR could enhance the interaction between the virtual pet and the real world, making the experience more engaging.

Other Notable Startups and Products:

Spatial: Specializes in using AR for collaborative workspaces. With LiDAR, Spatial's platform could offer more immersive and interactive virtual meetings, with participants interacting in a shared virtual space.

Magic Leap: Known for its AR headset, Magic Leap could leverage LiDAR for enhanced spatial awareness and interaction in various applications, from gaming to professional use.

These examples illustrate the expanding role of LiDAR in AR, enhancing experiences from home design and shopping to gaming and professional collaboration. As this technology continues to evolve, we can expect to see even more innovative applications in various sectors.

5. LiDAR for Construction

LiDAR data has become increasingly valuable in the construction industry, offering precise, high-resolution 3D information essential for various construction project stages. Here are some key use cases:

Construction site analysis and planning: Before construction begins, LiDAR can be used to create detailed topographical maps of the site. This data helps with planning the layout, understanding the terrain, and identifying potential issues like uneven ground or areas where water accumulates. Examples of startups in this space are Avvir (Source: https://www.avvir.io/solutions) and Fieldwire (Source: https://www.fieldwire.com/construction-management-software/), which was acquired by Hilti.

Construction progress monitoring: By regularly scanning the construction site with LiDAR, project managers can monitor progress against the plan. This includes checking whether the constructed elements are in the right place and have the right shape, and identifying any deviations from the planned design. Startups operating in this space include Reconstruct (Source: https://reconstructinc.com/) and Doxel (Source: https://doxel.ai/product), alongside data capture providers such as Boston Dynamics.

Generation of Digital Twins: A digital twin is a digital representation of a physical object or system that replicates its state and its relationships with other entities throughout its lifecycle. Multiple data sources can be combined to generate a digital twin, such as (a) LiDAR data that represents the 3D geometry of the physical infrastructure and (b) real-time sensor data (e.g., IoT). End users can (1) update, maintain, and communicate with the digital twin, (2) use the digital twin to monitor the asset’s performance, and (3) improve decision-making by planning interventions well before they are needed. A comprehensive review of digital twin techniques and algorithms in the construction and manufacturing industries is available in Eva’s Ph.D. thesis (Reference: https://www.repository.cam.ac.uk/items/3819fa32-a583-440f-8807-c7e51b75595e).

Safety analysis: By analyzing LiDAR data, potential safety hazards on a construction site can be identified and addressed. This includes monitoring slopes for stability, ensuring safe distances from machinery, and verifying that structures are secure.

As-Built Documentation: After construction completion, LiDAR can be used to create detailed as-built documentation. This documentation serves as a precise record of what was actually built and is crucial for future renovations, maintenance, and legal records.

Reality capture and integration with Building Information Modeling (BIM) (known in the construction industry as Scan-to-BIM): LiDAR data can be integrated into BIM systems, enhancing the BIM model with accurate, real-world data. This integration is vital for project planning, design validation, and lifecycle management. Some startups in this field include OpenSpace (Source: https://www.openspace.ai/), Aurivus (Source: https://aurivus.com/), and ReScan360 (Source: https://rescan360.com/).

3D mapping and photorealistic rendering: Geospatial platforms use the latest satellite and aerial imagery to extract comprehensive insights on a global scale. AI not only fills in missing attributes in the data but also helps create detailed, photorealistic 3D map representations.

The backbone of such platforms is a robust cloud-computing infrastructure, which enables rapid and regular updates so that the data remains current and reliable. This is especially important for applications that depend on up-to-date information. Together, advanced imaging technology, AI, and cloud computing make these platforms a powerful toolset for enterprise geospatial analysis across many sectors.
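The progress-monitoring comparison described earlier checks whether constructed elements are in the right place. It can be sketched as a scan-versus-design deviation check. For every scanned point, the sketch finds the distance to the nearest point sampled from the design model and flags points beyond a tolerance. It assumes the scan is already registered to the design coordinate frame and that the design surface has been sampled into points. The 2 cm tolerance, the function name, and the synthetic wall are hypothetical.

```python
import numpy as np


def deviation_report(scan_points, design_points, tolerance=0.02):
    """Distance from each scanned point to its nearest design point.

    ASSUMES both point sets are already in the same (registered) coordinate
    frame. Brute-force nearest neighbour for clarity. Returns per-point
    deviations (metres) and a mask of points exceeding `tolerance`.
    """
    scan = np.asarray(scan_points, dtype=float)
    design = np.asarray(design_points, dtype=float)
    # (N_scan, N_design, 3) differences -> distance to the closest design point.
    diffs = scan[:, None, :] - design[None, :, :]
    nearest = np.sqrt((diffs ** 2).sum(axis=2)).min(axis=1)
    return nearest, nearest > tolerance


# HYPOTHETICAL design: a 3 m x 3 m wall in the plane x = 0, sampled every 0.1 m.
ys, zs = np.meshgrid(np.arange(31) * 0.1, np.arange(31) * 0.1, indexing="ij")
design = np.column_stack([np.zeros(ys.size), ys.ravel(), zs.ravel()])

# Simulated as-built scan: the upper part of the wall sits 5 cm out of plane.
scan = design.copy()
scan[scan[:, 2] > 1.55, 0] += 0.05

dev, out_of_tolerance = deviation_report(scan, design, tolerance=0.02)
print(f"{out_of_tolerance.mean():.0%} of scanned points deviate by more than 2 cm")
```

For realistic scan sizes, a spatial index such as a KD-tree would replace the brute-force distance matrix, which grows with the product of the two point counts.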

6. LiDAR Annotation for Machine Learning

LiDAR point cloud files are large. They therefore require high-performance computers and, if the files must be downloaded, high-bandwidth internet connections. An individual LiDAR file can range from several hundred megabytes to multiple gigabytes. In practice, this means that crowd-sourced data annotators may lack the specialization, hardware, or internet connectivity required to label LiDAR data effectively.
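The file sizes quoted above follow directly from point rates and recording time. The back-of-the-envelope sketch below assumes a hypothetical sensor that produces about one million points per second. It also assumes the points are stored uncompressed as four 32-bit floats each (x, y, z, intensity). Real formats such as LAS/LAZ use different record layouts and compression.

```python
def point_cloud_size_bytes(points_per_second, duration_s, bytes_per_point):
    """Uncompressed size of a recording: points/s x seconds x bytes/point."""
    return points_per_second * duration_s * bytes_per_point


# HYPOTHETICAL sensor: ~1 million points per second, four float32 values
# per point (x, y, z, intensity = 16 bytes), recorded for 10 minutes.
size = point_cloud_size_bytes(1_000_000, 10 * 60, 4 * 4)
print(f"{size / 1e9:.1f} GB")
```

Ten minutes of such data comes to about 9.6 GB. That is consistent with the multi-gigabyte files annotators may have to download and open.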

There are several key best practices and challenges to consider when annotating LiDAR data:

Scalability and Volume: Due to the large volume of LiDAR data, annotation can be challenging. Automated or semi-automated annotation methods using machine learning algorithms can speed up the process.

Subjectivity and Variability: To reduce variability and ensure reliable annotations, it's important to establish clear rules and conduct regular training sessions.

Complex Data and Occlusion: LiDAR data can include complex scenes with occluded objects. Approaches like multi-view annotation or 3D cuboid annotation can help label objects accurately in these situations.

Data Labeling Bias: To avoid bias, it's recommended to use multiple annotators, inter-annotator agreement metrics, and regular quality checks.

Tool Selection: Selecting the right annotation tool is crucial. Features like 3D annotation and integration with LiDAR processing software are essential for effective annotation. Example tools for LiDAR annotation are Labelbox and SuperAnnotate.

Data Security and Privacy: Ensuring compliance with data protection laws and implementing data anonymization and encryption techniques are important for handling sensitive information.
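Cohen's kappa is one example of an inter-annotator agreement metric. It compares how often two annotators agree with how often they would agree by chance, given how frequently each one uses each label. The sketch below applies it to per-point class labels; the labels are made up for illustration. For more than two annotators, a metric such as Fleiss' kappa or Krippendorff's alpha is more appropriate.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each annotator's label
    frequencies.
    """
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label sequences")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used the same single label everywhere
    return (p_o - p_e) / (1 - p_e)


# Made-up per-point labels from two annotators for the same 10 LiDAR points.
annotator_a = ["car"] * 4 + ["ground"] * 4 + ["pedestrian"] * 2
annotator_b = ["car"] * 3 + ["ground"] * 5 + ["pedestrian"] * 2
kappa = cohens_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.3f}")
```

The two annotators agree on 9 of the 10 points. Chance agreement is already 0.36, so kappa comes out at about 0.84 rather than 0.9.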

Deep neural networks have proven effective on LiDAR data, especially for tasks like classification and semantic segmentation. However, challenges such as varying scanning conditions, sensor noise, and incomplete data must be addressed. A range of architectures, including point-based, voxel-based, graph-based, and view-based methods, is used to handle these challenges.

Conclusion

We have seen how LiDAR is reshaping diverse industries, including autonomous vehicles, augmented reality, and construction. LiDAR is remarkably versatile, and the decreasing cost of LiDAR sensors creates an opportunity for new use cases to emerge.

👉 What use cases for LiDAR come to mind for you?

As we navigate the evolving landscape of technology, LiDAR stands as a catalyst, propelling innovation and unlocking opportunities.

🎯 If you like this post, please refer your friends to subscribe using our referral program!