The AI Value Chain Ecosystem

Beyond the Hammer Approach: Crafting a Strategic Moat in AI Investment

In this post, we explore the key players in the continuously evolving AI value chain ecosystem. Before we do, it is worth setting the stage: generative AI companies have not yet proven that they can be profitable.

Some quick facts:

OpenAI is on track to be the most capital-intensive startup in Silicon Valley history. According to a recent interview in the Financial Times, OpenAI is seeking another round of investment from Microsoft.

$540mm in losses (2022) and $10bn raised from Microsoft

current annual revenue is estimated to reach ~$1.3bn from subscriptions

assuming ~$300mm for staff and G&A costs plus ~$2bn in training costs for GPT-5

expected 2023 annual losses are close to $1bn

Anthropic, co-founded by former OpenAI executives, is close to securing a $5bn valuation, backed by Google and Amazon.

$200mm ARR in 2023

$350mm annual spend in 2023
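The OpenAI figures above roughly add up. Here is a back-of-the-envelope sketch in Python that uses only the estimates quoted here, treats Anthropic's ARR as full-year revenue (an assumption), and ignores any costs not listed:

```python
from typing import Dict


def annual_result(revenue: float, costs: Dict[str, float]) -> float:
    """Revenue minus the sum of the listed costs; a negative value is a loss."""
    return revenue - sum(costs.values())


# All figures in US$ millions, taken from the estimates quoted above.
openai_2023 = annual_result(
    revenue=1_300,  # ~$1.3bn subscription revenue
    costs={"staff_and_ga": 300, "gpt5_training": 2_000},
)
anthropic_2023 = annual_result(
    revenue=200,  # $200mm ARR, treated as full-year revenue (an assumption)
    costs={"annual_spend": 350},
)

print(f"OpenAI 2023:    {openai_2023:+,.0f} $mm")     # OpenAI 2023:    -1,000 $mm
print(f"Anthropic 2023: {anthropic_2023:+,.0f} $mm")  # Anthropic 2023: -150 $mm
```

The ~$1bn OpenAI loss is driven almost entirely by the training bill: without the ~$2bn GPT-5 spend, the same revenue would more than cover the listed staff and G&A costs.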

It will be interesting to see how these two major foundational model providers shape the generative AI landscape as they grow over the next couple of years.

TL;DR

As product leaders, we are constantly challenged to invest in ideas with sustainable ROI and NPV and, more broadly, to run profitable product systems rather than systems that scale at a loss. We are clearly still in what I call the "wow" effect phase of these technologies, so it is critical to evaluate how foundational model providers and other partners can help create a complete product that delivers value to end customers without incurring such high costs. Understanding the AI ecosystem is essential for product leaders to navigate the challenges and opportunities of this rapidly evolving industry.

We should think about:

👉 How can AI companies strike a balance between groundbreaking innovation (“wow” effect) and the practicalities of profitability?

👉 How can generative AI be used to build sustainable business models?

Let’s dive into the AI value chain and why it matters.

The AI value chain landscape

A value chain is a series of specialized business entities whose combined contributions produce a value-generating product. A technical AI product spans a series of stages: initial research and development, computing infrastructure setup, rigorous testing, product integration, marketing and distribution, and finally customer support and continuous-improvement feedback loops.

Thought-provoking questions:

👉 What strategies are effective in optimizing each stage of the AI value chain?

👉 How can AI product leaders ensure cohesive integration across the value chain?

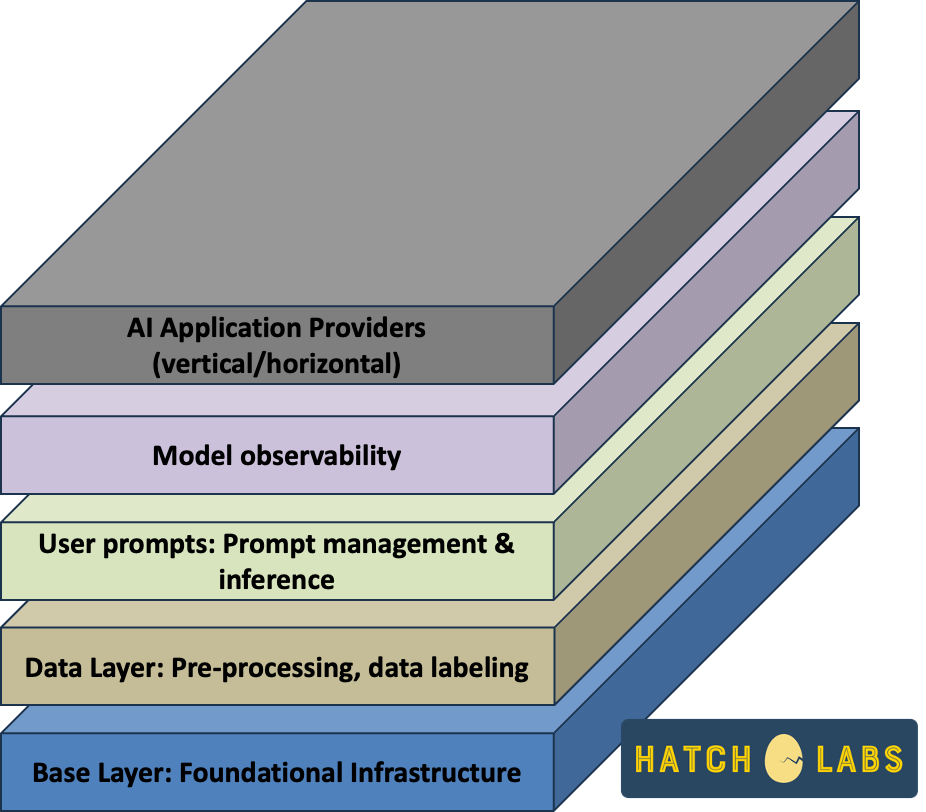

In the generative AI landscape, we can group the layers needed for building scalable technical products into the following categories:

Base layer: Foundational Infrastructure

Data layer: Pre-processing, data labeling, databases

User prompts: Prompt management and inference

Model observability

AI application providers:

vertical applications

horizontal applications

Base layer: Foundational Infrastructure

This layer includes research-focused startups, which design, build, and refine foundational models, and large MAANG companies, which provide the infrastructure needed to use these models at scale. Models range from open-source options (such as Llama 2 or Mistral) to API and fine-tuning providers (such as OpenAI). The key infrastructure providers are AWS, Azure, and Google Cloud, which dominate the space. These providers also support MLOps pipelines. It is important to define the requirements for these pipelines when building scalable systems and to work hand in hand with a dedicated MLOps team.

Companies such as Baseten and Modal are changing how machine learning models are deployed by offering serverless remote environments. These platforms aim to deliver strong performance and cost efficiency while reducing the complexity traditionally associated with manual deployment and inference. By removing the need to manually configure Kubernetes, provision servers, manage permissions, and set up autoscaling, they streamline the entire workflow and make it more accessible and less time-consuming.

Modular, Lightning ML, and OctoML are innovating in machine learning inference. Modular offers customizable and scalable inference solutions, Lightning ML focuses on fast, real-time inference, and OctoML optimizes models for performance across different types of hardware to improve efficiency and cost-effectiveness. Together, these companies are advancing ML inference with user-friendly and versatile technologies. Which provider we should partner with depends on the product we want to build and how that product delivers value.

Thought-provoking questions:

👉 What criteria are essential in choosing foundational infrastructure providers for AI projects?

👉 How do advancements in foundational infrastructure influence strategic planning for AI product leaders?

Data layer: Pre-processing, data labeling, databases

Before an LLM can use it, data requires meticulous pre-processing. This crucial step involves extracting context from various organizational data repositories, converting the data into a format that is compatible with the LLM, and efficiently loading it into the model's context window or a vector database. This process is essential to ensure the rapid response times that users expect when interacting with an LLM.
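A minimal sketch of the "convert and load" step, assuming documents have already been extracted as plain text: split each document into overlapping chunks small enough to fit in a context window or to embed into a vector database. Word counts stand in for tokens here (an assumption; production pipelines usually count tokens with the model's own tokenizer), and `Chunk` and `chunk_document` are hypothetical names, not any provider's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    source: str  # where the text came from (e.g. a wiki page or PDF)
    index: int   # position of the chunk within its source
    text: str


def chunk_document(source: str, text: str, max_words: int = 200, overlap: int = 40) -> List[Chunk]:
    """Split text into windows of at most max_words words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("need 0 <= overlap < max_words")
    words = text.split()
    chunks: List[Chunk] = []
    start = 0
    while start < len(words):
        window = words[start:start + max_words]
        chunks.append(Chunk(source, len(chunks), " ".join(window)))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the document
        start += max_words - overlap
    return chunks


doc = " ".join(f"w{i}" for i in range(10))  # ten dummy words
for chunk in chunk_document("handbook.md", doc, max_words=4, overlap=1):
    print(chunk.index, chunk.text)
# 0 w0 w1 w2 w3
# 1 w3 w4 w5 w6
# 2 w6 w7 w8 w9
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, at the cost of some duplicated storage.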

There are four main categories of data infrastructure providers to be aware of, depending on the application and the desired scalability of the LLM product:

data pipelines & experiment management providers

data labeling companies

databases (data lakes, metadata storage, context cache)

vector databases

Data pipeline and experiment management providers handle data workflows and experiments, supporting the training and deployment of LLMs.

We discuss data labeling processes and providers in separate posts.

Beyond traditional databases and data lakes, there are specialized databases designed to handle vectorized data. Prominent providers include Pinecone and Elasticsearch. They enable efficient storage, querying, and retrieval of high-dimensional data, which is commonly used in search and recommender systems. When combined with LLMs, they power Retrieval-Augmented Generation (RAG) systems, which we discuss in another post.
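To illustrate what a vector database contributes to a RAG flow, here is a toy in-memory stand-in that ranks stored vectors by cosine similarity and pastes the best matches into a prompt. It is not the Pinecone or Elasticsearch API: `InMemoryVectorStore`, `build_rag_prompt`, and the three-dimensional vectors are made up for illustration, whereas real systems use learned embeddings with hundreds of dimensions and approximate nearest-neighbor indexes instead of brute-force search.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical toy vector store: exact (brute-force) search over all stored vectors."""

    def __init__(self) -> None:
        self._items: Dict[str, Tuple[List[float], str]] = {}

    def upsert(self, item_id: str, vector: List[float], text: str) -> None:
        self._items[item_id] = (vector, text)  # insert, or overwrite an existing id

    def query(self, vector: List[float], top_k: int = 2) -> List[Tuple[str, float]]:
        scored = [(item_id, cosine(vector, v)) for item_id, (v, _) in self._items.items()]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

    def text(self, item_id: str) -> str:
        return self._items[item_id][1]


def build_rag_prompt(question: str, store: InMemoryVectorStore,
                     query_vector: List[float], top_k: int = 1) -> str:
    """Retrieve the top_k most similar passages and prepend them to the question."""
    hits = store.query(query_vector, top_k)
    context = "\n".join(store.text(item_id) for item_id, _ in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy data: in practice the vectors come from an embedding model.
store = InMemoryVectorStore()
store.upsert("refunds", [0.9, 0.1, 0.0], "Refunds are processed within 5 business days.")
store.upsert("shipping", [0.1, 0.9, 0.0], "Orders ship within 48 hours.")
store.upsert("careers", [0.0, 0.1, 0.9], "We are hiring ML engineers.")

prompt = build_rag_prompt("How long do refunds take?", store, [0.8, 0.2, 0.0])
print(prompt)
```

The LLM then answers from the retrieved passage rather than from its training data alone, which is the core idea behind RAG.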

User prompts: Prompt management and inference

Prompt management involves the strategic creation, organization, and optimization of prompts to get the most out of LLMs. Companies like PromptLayer help teams log, version, and track prompts and the responses they produce. Vellum provides tooling for experimenting with, evaluating, and deploying prompts. Text.Cortex helps automate the generation of high-quality content, including customizations. Lastly, LangSmith supports tracing, testing, and evaluating the outputs of LLM applications, which helps teams debug and refine their prompts. Together, these tools reflect the evolving landscape of prompt management, each contributing to a more seamless and efficient use of LLMs across applications.

👉 Before selecting a solution, check that it is compatible with the LLM providers you use.
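Much of this tooling revolves around one core idea: prompts are versioned artifacts rather than strings scattered through the code. Below is a minimal sketch of that idea using only the Python standard library. `PromptRegistry`, `publish`, and `render` are hypothetical names and do not reflect how PromptLayer, Vellum, or LangSmith actually work.

```python
from dataclasses import dataclass, field
from string import Template
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-house registry that keeps every version of each named prompt."""
    _versions: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, name: str, template: str) -> int:
        """Store a new version of a prompt template and return its 1-based version number."""
        self._versions.setdefault(name, []).append(template)
        return len(self._versions[name])

    def render(self, name: str, version: Optional[int] = None, **variables: str) -> str:
        """Fill in a template (latest version by default); raises KeyError if a variable is missing."""
        versions = self._versions[name]
        template = versions[-1] if version is None else versions[version - 1]
        return Template(template).substitute(**variables)


registry = PromptRegistry()
registry.publish("support_reply", "Reply politely to: $message")
registry.publish("support_reply", "You are a $tone support agent. Reply to: $message")

latest = registry.render("support_reply", tone="friendly", message="Where is my order?")
pinned = registry.render("support_reply", version=1, message="Where is my order?")
print(latest)  # You are a friendly support agent. Reply to: Where is my order?
print(pinned)  # Reply politely to: Where is my order?
```

Pinning a version lets a team roll out a new prompt gradually and roll back if quality drops, which is the kind of workflow dedicated prompt management platforms aim to support.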

Model observability

When it comes to enterprise AI deployment, ensuring data governance and security for LLMs is crucial. Specialized companies like Credal, Calypso AI, and HiddenLayer play key roles in this ecosystem. Credal focuses on data loss prevention with appropriate access and security protocols, Calypso AI on content governance, and HiddenLayer on threat detection and response, collectively ensuring secure integration of internal data with AI applications. Additionally, companies like Helicone focus on open-source LLM observability, HoneyHive enhances AI data management and model observability, and Truera focuses on model explainability, quality analysis, and continuous monitoring, aiding in the development of transparent and accountable AI systems. Together, these companies form a comprehensive framework for secure, transparent, and efficient LLM and app deployment in enterprise environments.
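Many of these products sit between the application and the model, inspecting traffic on the way in and out. Here is a minimal sketch of that pattern, assuming a model call can be wrapped as a plain Python function. The hypothetical `observed` decorator strips email addresses from prompts (a crude stand-in for data loss prevention) and logs prompt size, response size, latency, and errors for each call. It is not the API of Credal, Helicone, or any other vendor named above, and a single regex falls far short of real DLP.

```python
import re
import time
from dataclasses import dataclass
from typing import Callable, List, Optional

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LLMCall = Callable[[str], str]


@dataclass
class CallRecord:
    """One observability record per model call."""
    prompt_chars: int
    response_chars: int
    redactions: int
    latency_s: float
    error: Optional[str]


def observed(log: List[CallRecord]) -> Callable[[LLMCall], LLMCall]:
    """Wrap a model call: redact email addresses first, then record size, latency, and errors."""
    def decorator(llm_call: LLMCall) -> LLMCall:
        def wrapper(prompt: str) -> str:
            safe_prompt, redactions = EMAIL.subn("[EMAIL]", prompt)  # crude data loss prevention
            start = time.perf_counter()
            try:
                response = llm_call(safe_prompt)
            except Exception as exc:
                log.append(CallRecord(len(safe_prompt), 0, redactions,
                                      time.perf_counter() - start, repr(exc)))
                raise
            log.append(CallRecord(len(safe_prompt), len(response), redactions,
                                  time.perf_counter() - start, None))
            return response
        return wrapper
    return decorator


calls: List[CallRecord] = []


@observed(calls)
def fake_llm(prompt: str) -> str:
    """Placeholder for a real model call (hypothetical); it echoes the prompt in upper case."""
    return prompt.upper()


answer = fake_llm("Contact jane@example.com about the refund")
print(answer)  # CONTACT [EMAIL] ABOUT THE REFUND
print(calls[0])
```

In production, records like these would be shipped to a monitoring backend rather than kept in an in-memory list.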

Thought-provoking questions:

👉 How can AI product leaders improve user interaction with AI systems through effective prompt management?

👉 What is the role of model observability in upholding data governance and security in AI applications?

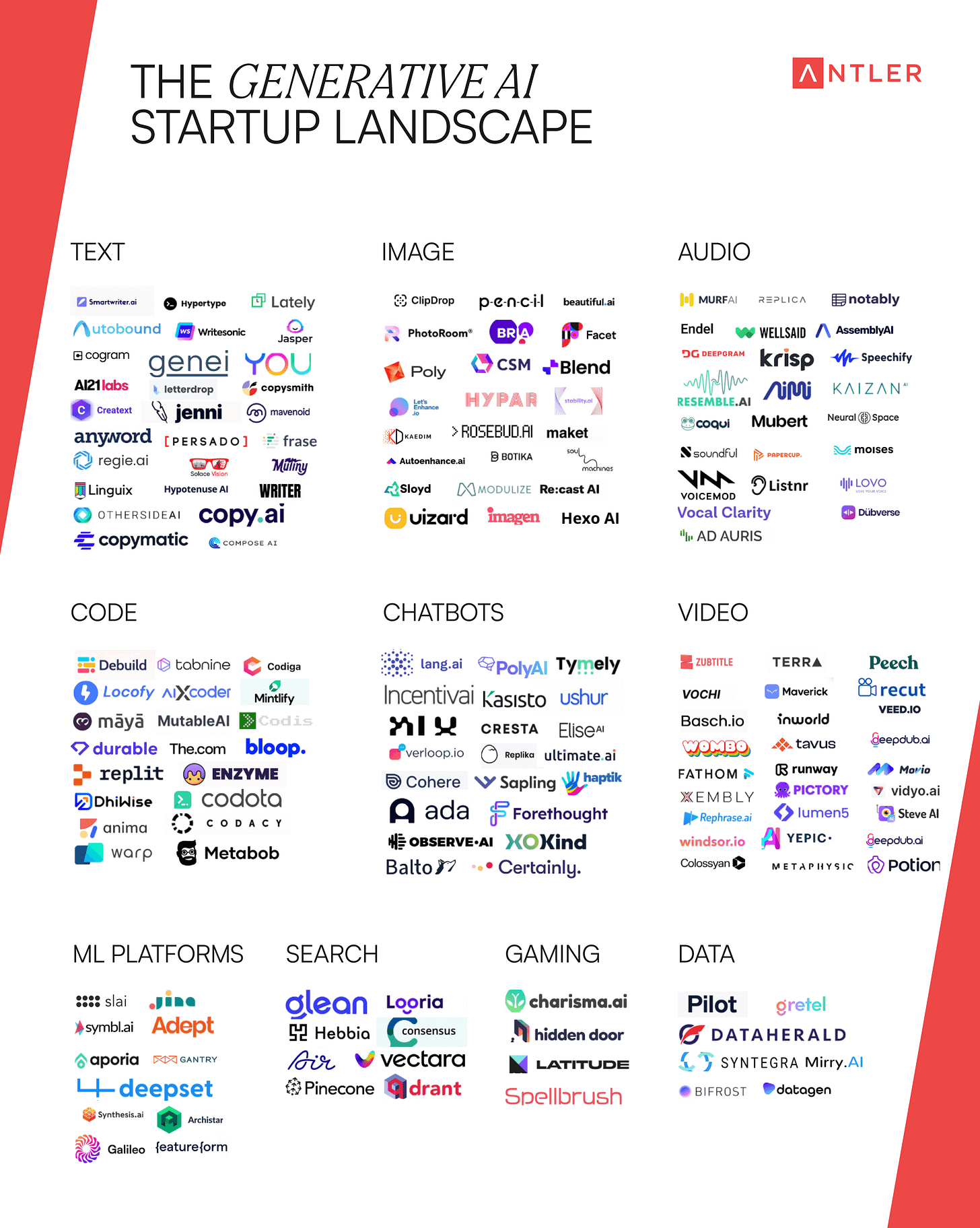

AI application providers

The space is getting crowded, with new players entering constantly. Below is an overview across different sectors.

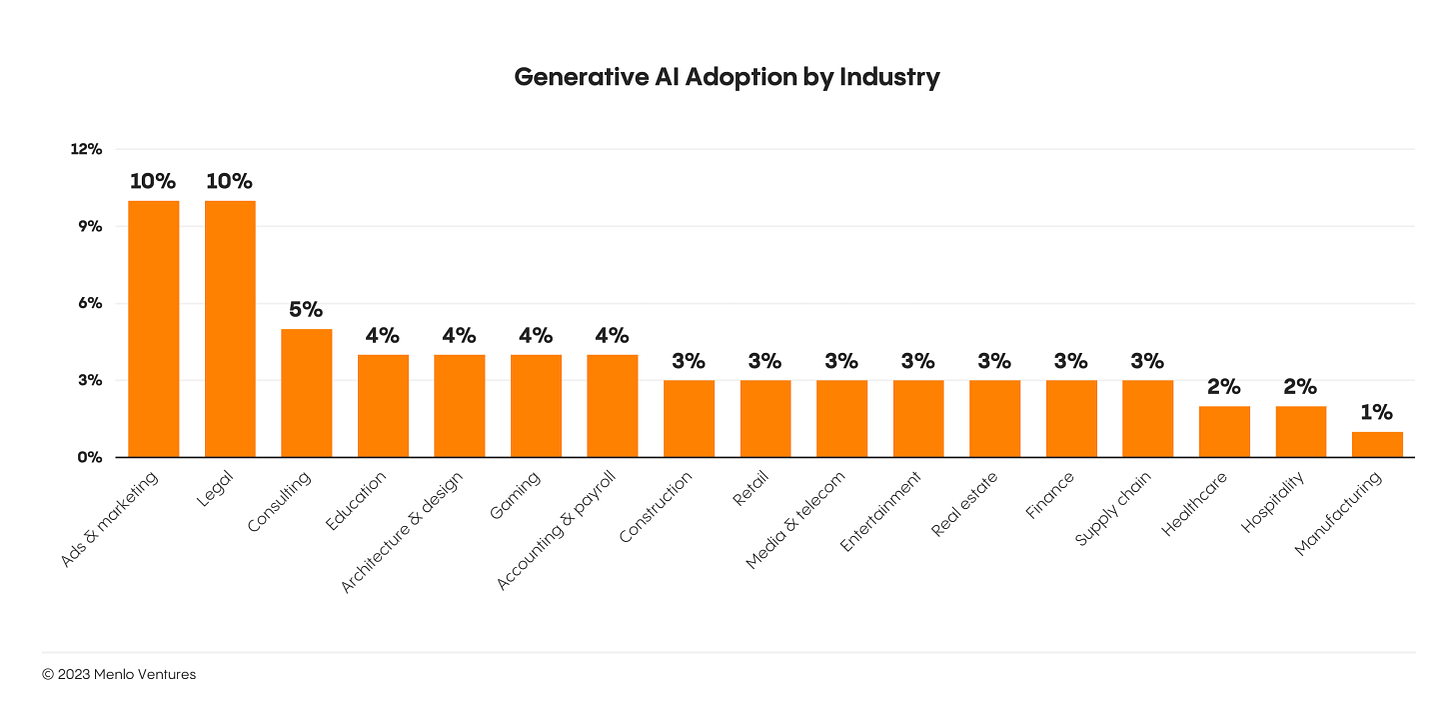

Vertical applications: We see a surge in vertical SaaS companies with industry-specific applications in construction (Procore), life sciences (Benchling), and healthcare (Eleos Health). Adoption is still low, though, with ads & marketing and legal applications showing the highest adoption rates.

Horizontal applications: Integrating AI into our daily work, with access to digital interactions such as calls, emails, and searches, promises significant productivity gains but requires balancing privacy against efficiency. This trend is already evident in specific applications like Gong or Zoom AI Companion for recording and analyzing sales calls and meetings. As AI tools become more sophisticated and trusted, they will increasingly enhance our work and evolve into indispensable collaborators. At the same time, the ecosystem will adapt by establishing security standards akin to SOC 2 compliance.

We also see companies such as Adept and Lindy creating AI agents that aim to let employees delegate repetitive, manual tasks such as customer support, HR support, recruiting, and sales.

Conclusion

Despite the wow effect of generative AI breakthroughs, the fundamentals of investment remain the same, even for AI companies. Success lies in the ability to hold a position within the value chain, secure a profitable niche, and, essentially, build a strong business moat. For example, when OpenAI released its GPT fine-tuning feature a few weeks ago, products built around that capability had no moat and practically vanished after the announcement. This example underscores the importance of creating and defending a competitive advantage in the industry. Crucially, this is not a one-size-fits-all strategy; it requires a nuanced, selective approach that resists the temptation to use a single solution for every challenge.

Closing with Warren Buffett’s words:

What we're trying to do, is we're trying to find a business with a wide and long-lasting moat around it, surround -- protecting a terrific economic castle with an honest lord in charge of the castle… we're trying to find is a business that, for one reason or another -- it can be because it's the low-cost producer in some area, it can be because it has a natural franchise because of surface capabilities, it could be because of its position in the consumers' mind, it can be because of a technological advantage, or any kind of reason at all, that it has this moat around it. But we are trying to figure out what is keeping -- why is that castle still standing? And what's going to keep it standing or cause it not to be standing five, 10, 20 years from now. What are the key factors? And how permanent are they? How much do they depend on the genius of the lord in the castle?

🚀 Stay tuned for exciting announcements from Hatch Labs in the next post!