Understanding and utilizing a Confusion Matrix for Product Managers

Sample Interview Question #1

In the series of Hatch Labs Academy articles for AI/ML Product Managers, we will explore sample interview questions with

Exciting reminder!

The Hatch Labs Academy is pleased to host a Meet & Greet session with the instructors, Eva Agapaki and Anjaly Joseph, this week on Dec 19th at 4pm EST / 1pm PST.

Sample Interview Question #1: What is a confusion matrix and as a Product Manager (PM) how do you use it?

A confusion matrix is a tool used in Machine Learning (ML), specifically for classification tasks. It is a table that compares the model's predictions with the actual ground-truth labels. For a binary classifier, its four cells count the true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN).

Example 1: In an ad rendering process, a model is created to classify ads as blank or non-blank. The confusion matrix is then a 2x2 table:

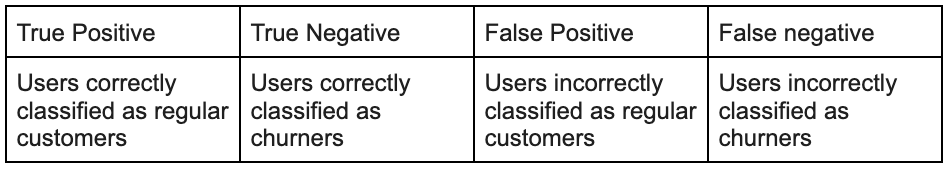
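As a rough illustration of how the four cells of such a 2x2 matrix are filled in, here is a minimal Python sketch. It assumes "blank" is treated as the positive class, and the eight labels are made-up data, not results from a real ads model. The helper name `confusion_counts` is hypothetical.

```python
from collections import Counter

def confusion_counts(actual, predicted, positive="blank"):
    """Count TP, FP, FN, TN for a binary classifier.

    `positive` names the class treated as the positive one
    (an assumption here: a blank ad is the positive case).
    """
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            # Model said "positive": correct -> TP, wrong -> FP
            counts["TP" if a == positive else "FP"] += 1
        else:
            # Model said "negative": missed positive -> FN, correct -> TN
            counts["FN" if a == positive else "TN"] += 1
    return {k: counts[k] for k in ("TP", "FP", "FN", "TN")}

# Hypothetical labels for eight rendered ads (illustrative data only).
actual    = ["blank", "blank", "ok", "ok",    "ok", "blank", "ok", "ok"]
predicted = ["blank", "ok",    "ok", "blank", "ok", "blank", "ok", "ok"]

cells = confusion_counts(actual, predicted)
print(cells)  # {'TP': 2, 'FP': 1, 'FN': 1, 'TN': 4}
```

In this toy run, one blank ad slipped through undetected (FN) and one good ad was wrongly flagged as blank (FP).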

Example 2: On an e-commerce platform, a model is designed to distinguish retained customers from churners based on certain factors. The confusion matrix would again be a 2x2 table of predicted versus actual classes.

By analyzing the values in the matrix, a PM and stakeholders can get valuable insights into the model's performance:

Accuracy: Overall proportion of correct predictions ((TP + TN) / (TP + TN + FP + FN)).

Precision: Proportion of predicted positives that are actually positive (TP / (TP + FP)).

👉 Answers the question: Of everything the model flagged as positive, how much was actually positive?

Recall: Proportion of actual positives that were correctly predicted (TP / (TP + FN)).

👉 Answers the question: Of all the actual positives, how many did the model catch, and how many did it miss?

Specificity: Proportion of actual negatives that were correctly predicted (TN / (TN + FP)).
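The four formulas above can be sketched in a few lines of Python. The function name `classification_metrics` and the counts passed to it are hypothetical, chosen only to make the arithmetic easy to follow.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and specificity from cell counts."""
    def ratio(numerator, denominator):
        # Avoid ZeroDivisionError when a row or column of the matrix is empty.
        return numerator / denominator if denominator else float("nan")

    total = tp + fp + fn + tn
    return {
        "accuracy": ratio(tp + tn, total),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }

# Hypothetical counts for 200 predictions (illustrative only).
metrics = classification_metrics(tp=40, fp=10, fn=20, tn=130)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

With these made-up counts, accuracy is 0.85 and precision is 0.80, but recall is only about 0.67: a third of the actual positives were missed, which the accuracy figure alone would not reveal.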

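Note: It is important to understand that, in practice, we usually cannot maximize both precision and recall at the same time! Raising one typically lowers the other, so we need to prioritize one based on the effect and cost that false positives and false negatives have on our product goals.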
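One way to see this trade-off is to vary the decision threshold of a classifier that outputs a score. The sketch below assumes such a score-based model; the scores, labels, and the helper `precision_recall_at` are all hypothetical.

```python
def precision_recall_at(threshold, scores, labels):
    """Precision and recall when scores >= threshold are predicted positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else float("nan")
    recall = tp / (tp + fn) if (tp + fn) else float("nan")
    return precision, recall

# Hypothetical model scores and true labels (1 = positive), illustrative only.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.10]
labels = [1,    1,    0,    1,    0,    1,    0,    0]

tradeoff = {t: precision_recall_at(t, scores, labels) for t in (0.25, 0.5, 0.85)}
for t, (p, r) in tradeoff.items():
    print(f"threshold={t}: precision={p:.2f}, recall={r:.2f}")
```

On this toy data, a low threshold of 0.25 catches every positive (recall 1.00) at a precision of about 0.57, while a strict threshold of 0.85 reaches precision 1.00 but recall drops to 0.50.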

Example from Instagram reels: How can we measure the number of irrelevant reels which are shown to users before they churn?

Success metric: virality

Guardrail: churn

Metric chosen: recall

As a PM, I apply confusion matrices in various ways:

Evaluate model performance: Understand the strengths and weaknesses of the model.

Identify data imbalances: Look for disparities in the matrix that indicate biases in the training data.

Prioritize model improvements: Focus on the areas with the highest FP or FN values, and address them through targeted data collection or model retraining.

Communicate model effectiveness: Use the confusion matrix to explain the model's performance to stakeholders.
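To illustrate why the full matrix matters when classes are imbalanced, here is a small sketch with made-up numbers: a hypothetical churn model that never predicts "churner" on a dataset where only 5 of 100 customers churn.

```python
# Hypothetical imbalanced dataset: 5 churners (1) among 100 customers.
actual = [1] * 5 + [0] * 95
predicted = [0] * 100  # a degenerate model that always predicts "not a churner"

pairs = list(zip(actual, predicted))
tp = sum(1 for a, p in pairs if a == 1 and p == 1)
fp = sum(1 for a, p in pairs if a == 0 and p == 1)
fn = sum(1 for a, p in pairs if a == 1 and p == 0)
tn = sum(1 for a, p in pairs if a == 0 and p == 0)

accuracy = (tp + tn) / len(actual)
recall = tp / (tp + fn) if (tp + fn) else float("nan")

print(f"TP={tp} FP={fp} FN={fn} TN={tn}")
print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")
```

Accuracy comes out at 0.95 even though the model finds none of the churners (recall 0.00). The lopsided matrix, with every actual positive sitting in the FN cell, makes that problem visible where a single accuracy number hides it.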

Stay tuned as we explore more technical generative AI concepts as well as AI/ML focused interview questions in the upcoming posts!