Core AI for PMs: Generative AI Product Glossary II

Understand generative AI model evaluation and improvement strategies, and lead product discussions

In this post, we continue with two important generative AI topics, where PMs can have a significant impact in product discussions:

LLM evaluation strategies

LLM enhancement strategies

1. LLM evaluation strategies

LLM evaluation is a multi-dimensional problem that depends on the stage of LLM development.

Below, we define four main stages of LLM product development: early iteration (research), pre-launch, production and post-launch. There are five general LLM evaluation categories that we need to consider:

🔢 Quantitative performance: This domain encompasses a wide array of metrics crucial for understanding the performance and effectiveness of LLMs, as informed by discussions with research teams. Examples include the Perplexity Score, Bilingual Evaluation Understudy (BLEU) and Recall-Oriented Understudy for Gisting Evaluation (ROUGE), among others.

🩺 Operational & health: This category encompasses resource efficiency, latency, and various health indicators. Key among these indicators are the error rates arising from security or content filtering issues, and the model's performance under differing levels of user demand.

👥 User engagement: Typically, we define these metrics at the pre-launch stage, once the user interface has been defined. Common examples include the quality of user interactions and user retention. These metrics are instrumental in evaluating and enhancing the overall user experience, leading to sustained engagement.

🏛 AI governance: It is crucial to establish certain metrics to ensure the development of an ethical, fair, and safe product. Typically defined during the pre-launch phase of AI products, these metrics serve as a framework for evaluating various critical aspects. Common areas of focus include model biases, privacy considerations, and resilience against adversarial attacks.

⚖️ A/B testing: These metrics are the gold standard for the production and post-launch phases. This is a vast field, dealing with user control group testing, controlled feature rollouts for improving user engagement and revenue, and much more. We will deep dive into A/B testing in a later post.

We are going to analyze each category in the sections that follow.

🔢 Quantitative performance

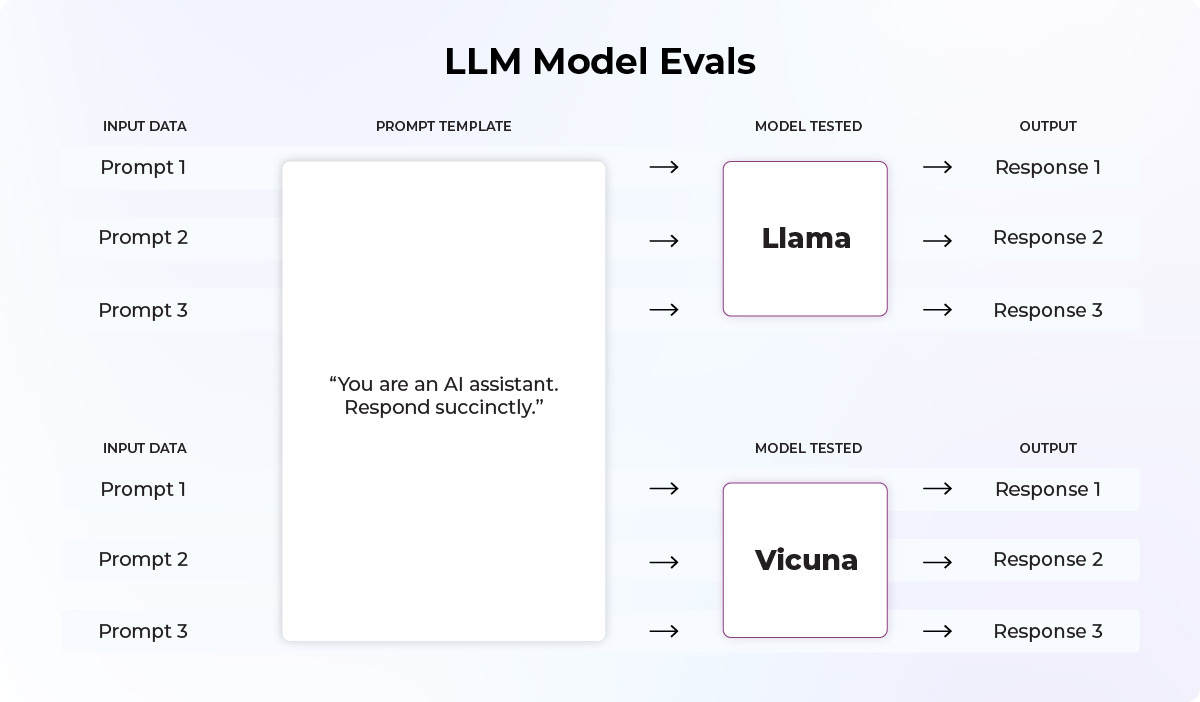

The evaluation of LLMs involves a systematic pipeline that runs a consistent dataset of input prompts through various models to quantitatively assess their performance. Open-source tools, including the OpenAI Evals library and the Hugging Face leaderboard, provide robust frameworks for this purpose.
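To make the pipeline idea concrete, here is a minimal sketch that runs the same prompts through several models and scores each one. The dataset, the two stand-in "models" (plain functions) and the exact-match scoring rule are all assumptions for illustration; a real setup would call actual models and use metrics agreed with the research team.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical evaluation set: (prompt, expected answer) pairs.
EVAL_SET: List[Tuple[str, str]] = [
    ("What is 2 + 2?", "4"),
    ("What is the capital of France?", "Paris"),
    ("What is the opposite of hot?", "cold"),
]


def exact_match_accuracy(model: Callable[[str], str],
                         eval_set: List[Tuple[str, str]]) -> float:
    """Fraction of prompts where the model output equals the expected answer."""
    hits = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in eval_set
    )
    return hits / len(eval_set)


def compare_models(models: Dict[str, Callable[[str], str]],
                   eval_set: List[Tuple[str, str]]) -> Dict[str, float]:
    """Run the same dataset through every model and collect one score each."""
    return {name: exact_match_accuracy(fn, eval_set) for name, fn in models.items()}


# Stand-in models so the sketch runs without any API access.
def model_a(prompt: str) -> str:
    answers = {"What is 2 + 2?": "4", "What is the capital of France?": "Paris"}
    return answers.get(prompt, "I don't know")


def model_b(prompt: str) -> str:
    return "4"


scores = compare_models({"model_a": model_a, "model_b": model_b}, EVAL_SET)
print(scores)
```

The key design point is that the dataset stays fixed while the models vary, so the scores are directly comparable.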

It is important to consider that there is no library/leaderboard that can fit all use cases and domains. There needs to be alignment with our north star and guardrail metrics as well as mutual understanding between the product and research teams, in order to define an LLM quantitative performance strategy. As the product becomes more mature across the subsequent product development phases, these requirements will likely need to be re-evaluated.

The most common evaluation frameworks/benchmarks are MMLU (language understanding), HellaSwag (commonsense reasoning), WinoGrande (reasoning around pronoun resolution), HumanEval (Python coding tasks), DROP (reading comprehension & arithmetic) and SuperGLUE (language understanding).

There are also aggregate evaluation benchmarks such as the Mosaic Eval Gauntlet, which includes 34 benchmarks organized into categories of competency.

This is an ongoing research area and multiple benchmarks will continue to emerge, so it is important to stay up-to-date with the state-of-the-art LLM evals frameworks.
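For intuition on two of the metrics named earlier, the sketch below computes perplexity from per-token log-probabilities and a simplified unigram ROUGE recall. Both functions are illustrative simplifications written for this post (whitespace tokenization, no stemming), not the reference implementations used by evaluation libraries.

```python
import math
from collections import Counter
from typing import List


def perplexity(token_logprobs: List[float]) -> float:
    """Exponential of the average negative log-probability (natural log) per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def rouge1_recall(reference: str, candidate: str) -> float:
    """Share of reference words that also appear in the candidate (clipped counts)."""
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())
    overlap = sum(min(count, cand_counts[word]) for word, count in ref_counts.items())
    return overlap / sum(ref_counts.values())


# A model that assigns probability 0.25 to every token is "as confused" as
# picking uniformly among 4 options.
print(perplexity([math.log(0.25)] * 4))
print(rouge1_recall("the cat sat on the mat", "the cat lay on the mat"))
```

Lower perplexity means the model finds the text less surprising, while higher ROUGE recall means the output covers more of the reference text.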

🩺 Operational & health

Let’s break down operational LLM evals into three broad categories:

Resource efficiency: These metrics provide insights on how the system’s resources are being used. If we are hosting the LLMs ourselves, we can look at CPU/GPU utilization dashboards, as well as server costs. If we are using an API service, it is important to measure the total number of tokens used and track costs. You can refer to our previous post for more information about what tokens are. It is also worth tracking whether the API service is currently overloaded.

Latency: Latency is the time it takes the model to provide an output after receiving an input (a user’s prompt). It can be a make-or-break factor for the user experience. OpenAI has published best practices for improving latency when using their API.

Environmental impact: We discussed in a previous post that LLMs have a large carbon footprint. For example, the carbon footprint of training BERT (one of the early models developed by Google) is equivalent to that of taking a transatlantic flight! (Source)
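The token cost and latency metrics above can be tracked with very little code. The sketch below is a rough illustration: the per-token prices are placeholder values (not real provider pricing), and `fake_model` is a hypothetical stand-in for an actual LLM call.

```python
import statistics
import time
from typing import Callable, Dict, List

# Placeholder prices in USD per 1,000 tokens (assumptions, not real pricing).
PRICE_PER_1K_PROMPT = 0.01
PRICE_PER_1K_COMPLETION = 0.03


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Estimated spend for a given number of prompt and completion tokens."""
    return (prompt_tokens / 1000 * PRICE_PER_1K_PROMPT
            + completion_tokens / 1000 * PRICE_PER_1K_COMPLETION)


def measure_latencies(call_model: Callable[[str], str],
                      prompts: List[str]) -> List[float]:
    """Wall-clock seconds per call, one entry per prompt."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        call_model(prompt)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize_latency(latencies: List[float]) -> Dict[str, float]:
    """Median, 95th percentile and worst case (needs at least two samples)."""
    percentiles = statistics.quantiles(latencies, n=100, method="inclusive")
    return {
        "p50": statistics.median(latencies),
        "p95": percentiles[94],  # 95th of the 99 cut points
        "max": max(latencies),
    }


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; sleeps briefly to simulate work."""
    time.sleep(0.001)
    return "ok"


latencies = measure_latencies(fake_model, ["hi", "hello", "how are you?"])
print(summarize_latency(latencies))
print(f"Estimated cost: ${estimate_cost(2000, 1000):.2f}")
```

Reporting a high percentile such as p95 alongside the median is a common choice, because a few very slow responses can hurt the user experience even when the typical response is fast.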

Health metrics provide insights into the overall health and functionality of the model. These are usually considered guardrail metrics and could include:

Uptime measures the amount of time the model remains operational without interruptions (e.g., % of prompts that return an HTTP 400 error). Besides engineering issues, interruptions can also come from user prompts that are classified as filtered content and blocked by the LLM provider (as Azure OpenAI does, for example).

Load capacity measures the LLM’s performance under varying levels of user demand. An example metric is the number of requests per second that the LLM can handle.

Anomaly detection accounts for the frequency of errors or issues encountered and the identification of unusual patterns.
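As a rough illustration of these health metrics, the sketch below computes an error rate from HTTP status codes, an average requests-per-second figure, and a very simple anomaly check. The function names and the two-standard-deviation threshold are assumptions chosen for this example; production monitoring would typically rely on a dedicated observability tool.

```python
import statistics
from typing import List


def error_rate(status_codes: List[int]) -> float:
    """Share of requests that returned an HTTP error (status 400 or above)."""
    return sum(code >= 400 for code in status_codes) / len(status_codes)


def requests_per_second(timestamps: List[float]) -> float:
    """Average throughput over the observed window (timestamps in seconds)."""
    window = max(timestamps) - min(timestamps)
    return len(timestamps) / window if window > 0 else float(len(timestamps))


def anomalies(hourly_errors: List[int], threshold: float = 2.0) -> List[int]:
    """Indices of hours whose error count sits more than `threshold`
    standard deviations above the mean."""
    mean = statistics.mean(hourly_errors)
    stdev = statistics.pstdev(hourly_errors)
    if stdev == 0:
        return []
    return [i for i, n in enumerate(hourly_errors) if (n - mean) / stdev > threshold]


print(error_rate([200, 200, 400, 500]))
print(requests_per_second([0.0, 1.0, 2.0, 4.0]))
print(anomalies([2, 3, 2, 3, 2, 3, 2, 30]))
```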

👥 User engagement

We, as PMs, should focus on understanding key user satisfaction metrics, as well as the type and quality of user-LLM interactions. Common categories of user engagement metrics include:

LLM usage frequency: We can measure Daily Active Users (DAU) and session frequency (the number of LLM sessions a user initiates in a given timeframe).

Engagement metrics: Metrics include session duration, user queries per session, words per query, LLM conversation length.

User satisfaction: Metrics include feedback ratings, success rate of user queries, number of follow-up queries.

User retention: Metrics include retention rate of new users to a specific LLM feature, new users who use an LLM feature in their first session.

Content interaction: We need to look at scenarios both from the creator and consumer side:

Creator satisfaction: Measure content reach (number of users, number of documents edited with the LLM), content quality, effort creating content.

Consumer satisfaction: Measure content reach (number of users, number of documents read that were edited with the LLM), content quality (number of consumption actions e.g., sharing, commenting, reviewing AI-generated content).

Specialized scenarios: Every use case & product is different and we recommend adding metrics on a case-by-case basis. For example, for collaboration scenarios, we need to measure improvement in productivity.
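Several of the engagement metrics above can be derived from a simple session log. The sketch below assumes a hypothetical log of (user, day) pairs, one per LLM session; the user names and dates are made up, and a real product would read these events from its analytics pipeline.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List, Set, Tuple

# Hypothetical event log: one (user_id, day) entry per LLM session.
SESSIONS: List[Tuple[str, date]] = [
    ("ana", date(2024, 1, 1)),
    ("ana", date(2024, 1, 1)),
    ("ben", date(2024, 1, 1)),
    ("ana", date(2024, 1, 2)),
    ("cy", date(2024, 1, 2)),
]


def daily_active_users(sessions: List[Tuple[str, date]]) -> Dict[date, int]:
    """Number of distinct users with at least one session per day."""
    users_by_day: Dict[date, Set[str]] = defaultdict(set)
    for user, day in sessions:
        users_by_day[day].add(user)
    return {day: len(users) for day, users in users_by_day.items()}


def sessions_per_user(sessions: List[Tuple[str, date]]) -> Dict[str, int]:
    """Session frequency: how many sessions each user started."""
    counts: Dict[str, int] = defaultdict(int)
    for user, _ in sessions:
        counts[user] += 1
    return dict(counts)


def retention_rate(sessions: List[Tuple[str, date]],
                   cohort_day: date, later_day: date) -> float:
    """Share of users active on cohort_day who are active again on later_day."""
    cohort = {u for u, d in sessions if d == cohort_day}
    returned = {u for u, d in sessions if d == later_day and u in cohort}
    return len(returned) / len(cohort) if cohort else 0.0


print(daily_active_users(SESSIONS))
print(sessions_per_user(SESSIONS))
print(retention_rate(SESSIONS, date(2024, 1, 1), date(2024, 1, 2)))
```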

🏛 AI governance

The landscape of AI governance is complex, with various stakeholders contributing significantly to its shape and direction. Notably, the recent Executive Order issued by the White House represents a critical stride in policymaking, including the formation of new standards for AI safety, security and privacy, while the AI Safety Summit serves as a pivotal forum for dialogue and strategy formulation among experts.

There are multiple key considerations for developing LLMs. Some of these include:

fairness (mitigate biases on race, gender, age),

interpretability (explain why an LLM made a specific choice),

safety (ensure the LLM operates reliably and without causing harm),

privacy (respect user data privacy regulations),

accountability (trace decisions back to particular datasets and training methods).

As an example of a safety consideration, let’s consider robustness to adversarial attacks. What does this mean? “Jailbreak” prompts or adversarial attacks are prompts that are designed to intentionally confuse the LLM into producing content that it normally wouldn’t, such as harmful or illegal content. The term jailbreak here draws an analogy from the world of electronics and software, where jailbreaking a device means unlocking it to allow unauthorized access or behavior.

There are multiple AI governance frameworks and it is important to pick the right one for each use case. A simple AI safety framework was developed by the Singaporean government. This framework gives deployment recommendations based on the severity of harm that AI can cause in a particular application, and the probability of harm happening in this application. The three possible recommendations are: 1) A human should be in the loop, closely supervising the AI’s operation, 2) A human should be “over the loop”, managing escalations and high-level operation of the AI, or 3) No human is required and the AI can operate autonomously. More information is available in the framework’s published documentation.
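The severity/probability idea can be expressed as a small decision function. This is only a sketch: the 0-to-1 scales, the 0.5 cut-offs and the exact mapping from each combination to a recommendation are assumptions made for illustration, and are not taken from the framework itself.

```python
from enum import Enum


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "human in the loop"
    HUMAN_OVER_THE_LOOP = "human over the loop"
    NO_HUMAN = "no human required"


def recommend_oversight(severity: float, probability: float) -> Oversight:
    """Map severity and probability of harm (both scored 0 to 1) to an
    oversight level. The 0.5 cut-offs are illustrative assumptions."""
    if not (0.0 <= severity <= 1.0 and 0.0 <= probability <= 1.0):
        raise ValueError("severity and probability must be between 0 and 1")
    high_severity = severity >= 0.5
    high_probability = probability >= 0.5
    if high_severity and high_probability:
        return Oversight.HUMAN_IN_THE_LOOP
    if high_severity or high_probability:
        return Oversight.HUMAN_OVER_THE_LOOP
    return Oversight.NO_HUMAN


print(recommend_oversight(severity=0.9, probability=0.8).value)
print(recommend_oversight(severity=0.9, probability=0.1).value)
print(recommend_oversight(severity=0.1, probability=0.1).value)
```

In practice, the scoring of severity and probability is the hard part and should involve legal, policy and domain experts rather than a fixed threshold.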

In the second part of this post, we’ll explore strategies to improve LLM performance if our LLM evals are below expectations.

2. LLM enhancement strategies

Zero-shot

Zero-shot LLMs are a quick, cost-effective way for businesses to leverage the power of foundational LLMs across a multitude of tasks without the need for additional training. In other words, the LLM is asked to solve a task that it was not directly trained on. For example, GPT-3 is capable of various tasks like sentiment analysis without the need for task-specific training.

Prompt:

Improve the writing of this paragraph: "Running quickly, the lunch was eaten by a dog, which it was very hungry and the sandwich, which I had made it for my lunch which was gone."

GPT-4:

A hungry dog swiftly devoured the sandwich I had made for my lunch, leaving nothing behind.

In-context learning

The simplest way to improve the performance of zero-shot LLMs is to provide some relevant information inside the prompt. This equips the model with task-specific knowledge without the need to re-train it. For example, we can provide up-to-date information that the model was not trained on, or a task that it hasn’t seen in its training set.

Prompt:

Give me a bullet point critique of the White House AI executive order signed in October 2023. More information: <paste news articles about the executive order>

GPT-4:

Certainly, here's a critique of the Executive Order in bullet points, considering the potential strengths and challenges:

Strengths: …

Challenges: …
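Programmatically, in-context learning is mostly string assembly: we attach the extra material to the user's request before sending it to the model. The helper below is a hypothetical sketch; its name and the character budget are assumptions, and real systems usually budget by tokens rather than characters.

```python
from typing import List


def build_in_context_prompt(question: str, documents: List[str],
                            max_chars: int = 4000) -> str:
    """Append supporting documents to the question until the budget is used up."""
    context = ""
    for doc in documents:
        if len(context) + len(doc) > max_chars:
            break  # stop before exceeding the context budget
        context += doc.strip() + "\n\n"
    return f"{question}\n\nMore information:\n{context}".strip()


prompt = build_in_context_prompt(
    "Give me a bullet point critique of the executive order.",
    ["<news article 1>", "<news article 2>"],
)
print(prompt)
```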

One-shot / few-shot

In one-shot or few-shot prompting, the LLM is shown a single example (or a few examples) of a new task directly in the prompt, and no model weights are updated. For example, we can provide 3 examples of translating English to French and then prompt the model.

Examples:

Translate to French: sea otter → loutre de mer

Translate to French: peppermint → menthe poivrée

Translate to French: plush giraffe → girafe peluche

….

Prompt:

Translate to French: cheese →

Response:

fromage
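The translation example above can be assembled into a single prompt with a few lines of code. This is a minimal sketch; `build_few_shot_prompt` is a hypothetical helper name, and the resulting string would be sent to whichever model we are using.

```python
from typing import List, Tuple


def build_few_shot_prompt(instruction: str,
                          examples: List[Tuple[str, str]],
                          query: str) -> str:
    """Place worked examples before the new query so the model can imitate them."""
    lines = [f"{instruction}: {source} → {target}" for source, target in examples]
    lines.append(f"{instruction}: {query} →")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate to French",
    [
        ("sea otter", "loutre de mer"),
        ("peppermint", "menthe poivrée"),
        ("plush giraffe", "girafe peluche"),
    ],
    "cheese",
)
print(prompt)
```

Ending the prompt with an unfinished line in the same format as the examples nudges the model to complete the pattern.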

Instruction tuning

Instruction tuning is a technique used to teach LLMs to follow instructions (e.g. InstructGPT) or a particular response format, such as dialogue format (e.g. ChatGPT).

Fine-tuning

Fine-tuning adapts an LLM to specific tasks by training it further on a smaller, focused dataset. For example, a translation service company could fine-tune an LLM to better understand legal documents, which would lead to more accurate and less generic translations. As a loose analogy, fine-tuning turns a generalist AI chatbot into something closer to a law specialist with 20 years of experience.

Similarly, an e-commerce business could fine-tune an LLM to better understand product features, helping it create appealing product descriptions that could boost sales and engagement. In essence, fine-tuning helps businesses customize LLMs to meet their unique needs and perform better in their specific tasks.
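Much of the practical work in fine-tuning is preparing the focused dataset. The sketch below turns (input, ideal output) pairs into JSON Lines, one training record per line. The chat-style record layout, the helper name and the sample product data are assumptions for illustration; the exact format depends on the fine-tuning service or library, so check its documentation.

```python
import json
from typing import List, Tuple


def to_jsonl(pairs: List[Tuple[str, str]], system_prompt: str) -> str:
    """Serialize (user input, ideal answer) pairs as one JSON record per line."""
    lines = []
    for user_text, ideal_answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": user_text},
                {"role": "assistant", "content": ideal_answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)


# Made-up e-commerce examples.
dataset = to_jsonl(
    [
        ("Describe: steel water bottle, 750 ml", "Stay hydrated all day with ..."),
        ("Describe: wool hiking socks", "Keep your feet warm on every trail ..."),
    ],
    system_prompt="You write appealing product descriptions.",
)
print(dataset)
```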

Retrieval-augmented generation (RAG)

RAG is a process that consists of two steps:

Retrieve information relevant to the user’s prompt from a collection of data sources and then

Ask the LLM to generate a response, given the additional information retrieved.

In other words, RAG allows the LLM to look up information (this step is called semantic search) to automatically provide better, more informed answers to user queries.

We will deep dive into RAG and other enhancement strategies in a following post!